While large language models (LLMs) demonstrate remarkable capabilities, they often confidently make logical and factual mistakes, which can invalidate the entire solution. A common approach is the Best-of-N method, where N candidate solutions generated by the LLM are ranked by a verifier, and the best one is selected. While LLM-based verifiers are typically trained as discriminative classifiers to score solutions, they do not utilize the text generation capabilities of pretrained LLMs.

To overcome this limitation, researchers from Google have instead proposed training verifiers using the ubiquitous next-token prediction objective, jointly on verification and solution generation. Compared to standard verifiers, such generative verifiers (GenRM) can benefit from several advantages of LLMs: they integrate seamlessly with instruction tuning, enable chain-of-thought reasoning, and can utilize additional inference-time computation via majority voting for better verification.

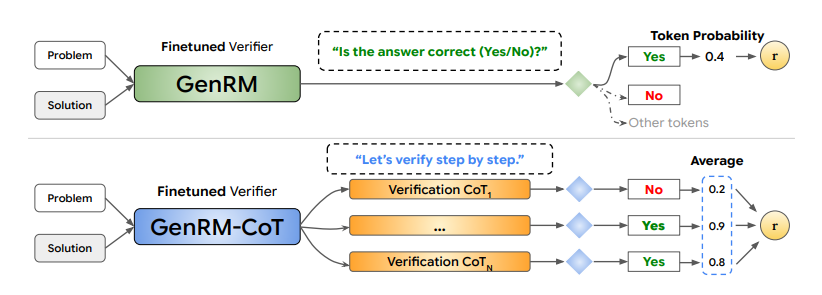

Generative verifiers, namely GenRM and GenRM-CoT, start with a given question and a candidate solution, GenRM directly finetunes an LLM to answer the question ‘Is the answer correct (Yes/No)?’ via SFT on the next-token response corresponding to either ‘Yes’ or ‘No’. During inference, the verifier score is obtained by extracting the probability of the ‘Yes’ token. In comparison, GenRM-CoT finetunes a LLM to produce verification chain-of-thought (CoT) rationale before yielding the final Yes/No token. At test-time, multiple samples of CoT are rational and use majority voting to compute the average probability of ‘Yes’, enabling GenRM-CoT to utilize additional inference-compute for better verification.

Gemma-based was verified on algorithmic and grade-school math reasoning tasks, and GenRM outperforms discriminative verifiers and LLM-asa-Judge, showing a 16−64% improvement in the percentage of problems solved with Best-of-N. Furthermore, GenRM was able to scale favorably across dataset size, model capacity, and inference-time compute.

Paper : https://arxiv.org/pdf/2408.15240